Model Calibration Tests

Before we look at how to test whether a model is calibrated, let's first establish what calibration is and why it's important. In machine learning, a model is calibrated if, whenever it predicts an x% chance of an event, that event actually happens about x% of the time.

To make this more concrete: if we take every prediction where the model assigns the positive class 90% confidence, then about 90% of those examples should actually be positive.
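A minimal sketch of this check in Python, assuming binary labels (1 = positive) and a list of predicted positive-class probabilities. The function name `observed_rate_at_confidence`, the ±0.05 tolerance band, and the toy data are hypothetical, chosen only for illustration.

```python
def observed_rate_at_confidence(probs, labels, target=0.9, tol=0.05):
    """Fraction of actual positives among predictions whose positive-class
    probability lies within `tol` of `target` (hypothetical helper)."""
    selected = [y for p, y in zip(probs, labels) if abs(p - target) <= tol]
    if not selected:
        return None  # no predictions near this confidence level
    return sum(selected) / len(selected)

# Made-up toy data: ten predictions at 0.9 confidence, nine of which are
# actually positive, plus two lower-confidence predictions that are ignored.
probs = [0.9] * 10 + [0.2, 0.3]
labels = [1] * 9 + [0] + [0, 1]

print(observed_rate_at_confidence(probs, labels))  # 0.9
```

For a calibrated model the returned fraction should be close to `target`; repeating the check across all confidence levels gives the kind of calibration curve discussed below.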

Sigmoid and softmax outputs are not automatically probabilities. Softmax merely maps a model's raw scores to numbers between 0 and 1 that sum to one across all classes, and sigmoid does the same for the two classes of a binary problem (p and 1 - p). If we want the scores coming out of sigmoid or softmax to be truly probabilistic, the model needs to be calibrated.
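To see why summing to one is not enough, here is a small sketch in plain Python. The `softmax` helper and the logit values are illustrative, not taken from any particular model. Multiplying the logits by 10 keeps the same predicted class and still yields outputs that sum to one, yet the reported confidence jumps from about 0.66 to nearly 1.0. Both versions cannot be trusted as probabilities at once, and nothing in the softmax itself tells us which, if either, is calibrated.

```python
import math

def softmax(logits):
    """Map raw scores to values in (0, 1) that sum to one."""
    m = max(logits)                           # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

logits = [2.0, 1.0, 0.1]
p = softmax(logits)
p_sharp = softmax([10 * z for z in logits])   # same ranking, scaled-up scores

# Both outputs sum to one and pick the same class...
print(round(sum(p), 6), round(sum(p_sharp), 6))       # 1.0 1.0
print(p.index(max(p)), p_sharp.index(max(p_sharp)))   # 0 0
# ...but they report very different confidences for that class.
print(round(p[0], 3), round(p_sharp[0], 3))           # 0.659 1.0
```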

Calibration matters whenever the predicted probabilities themselves are used, for example in medical settings or ad click prediction. If a model predicts that 10% of a group of users will click on an ad but only 4% actually do, the campaign generates far less revenue than expected. Credit to Chip Huyen for this example [1].
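The gap in that example is easy to quantify: summing the predicted probabilities gives the number of clicks the model expects, which can be compared with the clicks actually observed. A minimal sketch, assuming a hypothetical group of 1,000 users and a hypothetical revenue of 0.50 per click:

```python
import math

# Hypothetical campaign: 1,000 users, each with a 10% predicted click
# probability, of whom 40 actually click (the 4% rate from the example).
predicted = [0.10] * 1000
clicked = [1] * 40 + [0] * 960

expected_clicks = math.fsum(predicted)   # sum of predicted probabilities
actual_clicks = sum(clicked)

print(expected_clicks, actual_clicks)    # 100.0 40
print(actual_clicks / expected_clicks)   # 0.4

revenue_per_click = 0.50  # hypothetical price, for illustration only
print(revenue_per_click * expected_clicks, revenue_per_click * actual_clicks)  # 50.0 20.0
```

Here only 40% of the expected clicks, and therefore of the expected revenue, materialize. A calibrated model would keep the expected and observed counts close.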

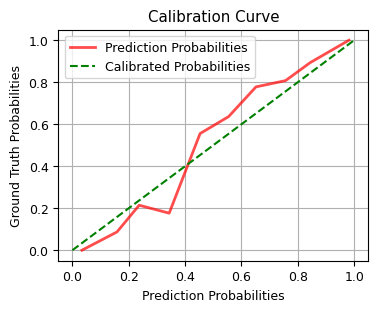

For our model, we plot the ideal calibration curve (the green diagonal line) against our model's predictions. Our model's curve departs from the diagonal, which shows that its scores cannot be read as probabilities as reliably as those of a properly calibrated model.
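A curve like this is typically built by binning predictions by confidence and comparing, within each bin, the average predicted probability with the fraction of examples that are actually positive; the diagonal is where the two are equal. A minimal standard-library sketch of that computation, assuming binary labels and equal-width bins. The function name `calibration_curve_points`, `n_bins=10`, and the toy data are hypothetical, not the code behind the figure above.

```python
def calibration_curve_points(probs, labels, n_bins=10):
    """Return (mean predicted probability, fraction of positives) for each
    non-empty equal-width bin on [0, 1] (hypothetical helper)."""
    sums = [0.0] * n_bins      # total predicted probability per bin
    positives = [0] * n_bins   # number of actual positives per bin
    counts = [0] * n_bins      # number of predictions per bin
    for p, y in zip(probs, labels):
        i = min(int(p * n_bins), n_bins - 1)  # p == 1.0 goes in the last bin
        sums[i] += p
        positives[i] += y
        counts[i] += 1
    return [
        (sums[i] / counts[i], positives[i] / counts[i])
        for i in range(n_bins)
        if counts[i] > 0  # skip empty bins
    ]

# Made-up toy data: an overconfident model whose 0.9 predictions are
# right only 60% of the time.
probs = [0.9] * 5 + [0.1] * 5
labels = [1, 1, 1, 0, 0] + [0] * 5

for mean_pred, frac_pos in calibration_curve_points(probs, labels):
    print(f"predicted {mean_pred:.2f}  observed {frac_pos:.2f}")
# predicted 0.10  observed 0.00
# predicted 0.90  observed 0.60
```

To draw the diagram, these points can be plotted against the line y = x with a plotting library such as matplotlib. scikit-learn's `sklearn.calibration.calibration_curve` computes similar points, returning the fraction of positives and the mean predicted probability per bin, in that order.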

References

[1] Huyen, Chip (2022). Designing Machine Learning Systems. O'Reilly Media.