Model Fairness and Invariance Tests

Models can carry biases related to race, gender, ethnicity, age, or other legally protected classes. We want our models to treat all groups and individuals fairly.

In this post, we will use an invariance test to see how our model's performance changes when we change the value of the protected gender attribute. Invariance tests check whether changing features that ideally should not affect the outcome changes the model's outputs. For example, in loan prediction, flipping the race of an applicant should not change the prediction [1].
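The core of a per-row invariance check can be sketched in a few lines. This is a minimal sketch, assuming the model is any callable that maps a single row (a dict of feature values) to a 0/1 prediction; `prediction_flip_rate`, `fair_model`, `biased_model`, and the `study_hours` feature are hypothetical names with made-up toy data. It counts how often a prediction changes when only the protected feature is overwritten; for a model that is invariant to that feature, the rate should be 0.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def prediction_flip_rate(
    predict: Callable[[Row], int], rows: List[Row], column: str, value: Any
) -> float:
    """Fraction of rows whose prediction changes when `column` is set to `value`."""
    if not rows:
        return 0.0
    changed = 0
    for row in rows:
        perturbed = {**row, column: value}  # copy of the row with one feature overwritten
        if predict(perturbed) != predict(row):
            changed += 1
    return changed / len(rows)


# Hypothetical toy data, for illustration only.
rows = [
    {"gender": "male", "study_hours": 5},
    {"gender": "female", "study_hours": 9},
    {"gender": "female", "study_hours": 4},
    {"gender": "male", "study_hours": 7},
]


def fair_model(row: Row) -> int:
    # Ignores gender entirely, so it should pass the invariance test.
    return int(row["study_hours"] >= 5)


def biased_model(row: Row) -> int:
    # Uses a different threshold per gender, so flipping gender can change its output.
    return int(row["study_hours"] >= (4 if row["gender"] == "male" else 6))


fair_rate = prediction_flip_rate(fair_model, rows, "gender", "female")
biased_rate = prediction_flip_rate(biased_model, rows, "gender", "female")
print(fair_rate, biased_rate)
```

A non-zero rate points to individual rows worth inspecting, which complements the aggregate metric comparison used in the rest of this post.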

For our example, we'll take the gender column in our data and set every value to "female". In other words, we pretend that only females are present in the dataset, and we compare the model's performance on this modified data against a baseline: its performance on the original, unmodified dataset.
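The comparison described above can be sketched as follows. This is a minimal sketch, not the post's actual code: it assumes a model exposed as a callable from a row dict to a 0/1 prediction, binary labels where 1 means the exam was passed, and hypothetical names (`flipped_metrics`, `biased_model`, `study_hours`) with made-up toy data. It computes precision and recall on the unmodified rows (the baseline) and on copies with every `gender` value overwritten.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Row = Dict[str, Any]


def precision_recall(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float]:
    """Precision and recall for binary labels where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def flipped_metrics(
    predict: Callable[[Row], int],
    rows: List[Row],
    y_true: Sequence[int],
    column: str,
    value: Any,
) -> Dict[str, Tuple[float, float]]:
    """Compare metrics on the unmodified rows (baseline) with every `column` set to `value`."""
    baseline_preds = [predict(row) for row in rows]
    flipped_preds = [predict({**row, column: value}) for row in rows]
    return {
        "baseline": precision_recall(y_true, baseline_preds),
        f"{column}={value}": precision_recall(y_true, flipped_preds),
    }


# Hypothetical toy data: label 1 means the exam was passed.
rows = [
    {"gender": "male", "study_hours": 5},
    {"gender": "female", "study_hours": 9},
    {"gender": "female", "study_hours": 4},
    {"gender": "male", "study_hours": 3},
    {"gender": "female", "study_hours": 5},
]
y_true = [1, 1, 0, 0, 1]


def biased_model(row: Row) -> int:
    # Hypothetical model with a gender-dependent threshold.
    return int(row["study_hours"] >= (4 if row["gender"] == "male" else 6))


results = flipped_metrics(biased_model, rows, y_true, "gender", "female")
for name, (precision, recall) in results.items():
    print(f"{name}: precision={precision:.2f} recall={recall:.2f}")
```

Passing `"male"` as the value gives the all-male variant of the same test. With a pandas DataFrame, the same flip can be written as `df.assign(gender="female")`, which returns a modified copy and leaves the original data intact.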

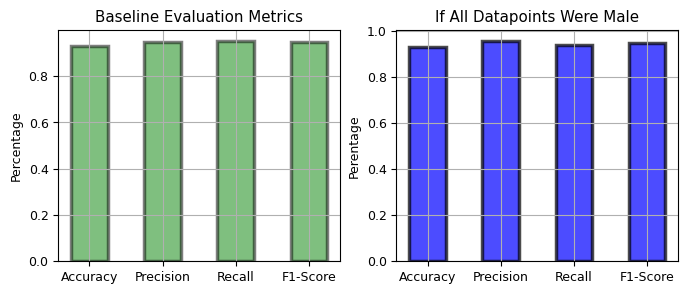

We see that precision increases slightly, while recall drops quite a bit. Let's try again, but this time we set the gender column to "male" for every row.

We see a similar pattern, but recall does not drop as much when every row is set to male. Recall measures, out of all actual positive (exam passed) cases, the percentage that the model predicted as positive. Depending on the situation, this difference between the two flips may warrant further investigation.
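In terms of true positives (TP), false positives (FP), and false negatives (FN), the two metrics being compared are:

$$
\text{Precision} = \frac{TP}{TP + FP}, \qquad \text{Recall} = \frac{TP}{TP + FN}
$$

Because TP + FN is the number of students who actually passed, which the flip does not change, a drop in recall means the model misses more of those students. A drop in recall alongside a slight rise in precision is consistent with the model predicting "passed" less often overall.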

References

[1] Huyen, Chip (2022). Designing Machine Learning Systems. O'Reilly Media.