Feature Importance and Attribution

When a model makes a prediction, two questions follow naturally: why did the model make that prediction, and which features had the most impact on it? Most powerful machine learning models are not inherently interpretable, so we turn to Shapley values to answer these questions. A Shapley value assigns each feature its average marginal contribution to a prediction, taken over all possible subsets of the other features.
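To make the idea concrete, here is a minimal sketch of an exact Shapley computation for a single prediction. Everything in it is an assumption for illustration: the `shapley_values` helper, the `toy_model` with its made-up weights, and the choice to represent a "missing" feature by a baseline value. It is not the model or the library used for the plots in this post, and the brute-force loop is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance x, relative to a baseline.

    Features outside a coalition are replaced by their baseline value
    (an assumption; other ways of "removing" a feature exist).
    The cost grows as 2^n, so this only works for very few features.
    """
    n = len(x)

    def value(coalition):
        # Use the real value for features in the coalition, baseline otherwise.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size: |S|! (n-|S|-1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical toy model: a linear score over three made-up features.
def toy_model(z):
    g1, g2, study_hours = z
    return 0.2 * g1 + 0.7 * g2 + 0.1 * study_hours


x = [12.0, 14.0, 2.0]          # the instance being explained (made up)
baseline = [10.0, 10.0, 2.0]   # reference point (made up)

phi = shapley_values(toy_model, x, baseline)
print(phi)
```

For a linear model like this one, each Shapley value reduces to the weight times the feature's distance from the baseline, and the values sum to the difference between the prediction and the baseline prediction. In practice, libraries approximate this computation rather than enumerating every subset.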

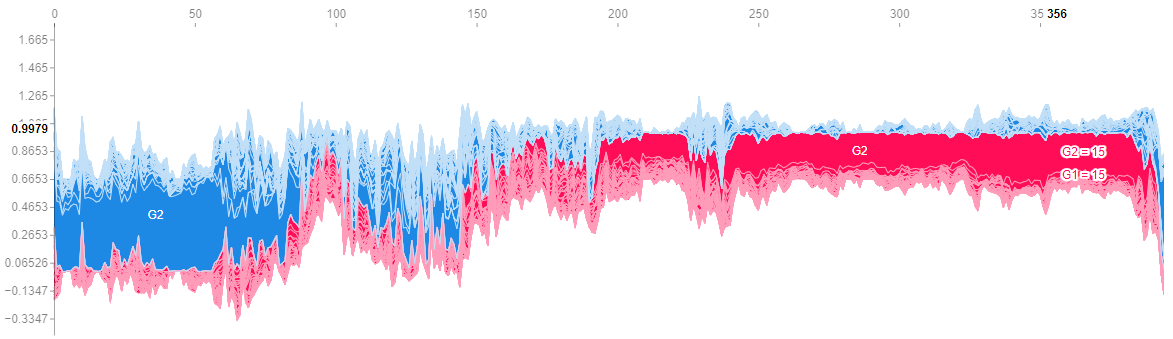

For our example, we plot the Shapley values to see which features matter most.

The feature "G2" (the grade on the second exam) is by far the most significant feature, for both predicted outcomes (pass and fail).
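One common way to turn per-prediction Shapley values into a single ranking is to average their absolute values per feature. The sketch below assumes that convention; the `global_importance` helper, the feature names, and every number in `shap_matrix` are hypothetical and are not taken from the plots above.

```python
def global_importance(shap_matrix, feature_names):
    """Rank features by mean absolute Shapley value across samples.

    shap_matrix[i][j] is the Shapley value of feature j for sample i.
    """
    n_samples = len(shap_matrix)
    scores = {}
    for j, name in enumerate(feature_names):
        # Absolute value, so positive and negative pushes both count.
        scores[name] = sum(abs(row[j]) for row in shap_matrix) / n_samples
    # Largest average impact first.
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical Shapley values for three samples and three features.
feature_names = ["G1", "G2", "study_hours"]
shap_matrix = [
    [0.05, 0.40, 0.01],
    [-0.10, -0.35, 0.00],
    [0.08, 0.30, -0.02],
]

ranking = global_importance(shap_matrix, feature_names)
for name, score in ranking:
    print(f"{name:>12}: {score:.3f}")
```

Taking the absolute value matters here: a feature that pushes some predictions strongly toward "pass" and others strongly toward "fail" would otherwise average out to roughly zero and look unimportant.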

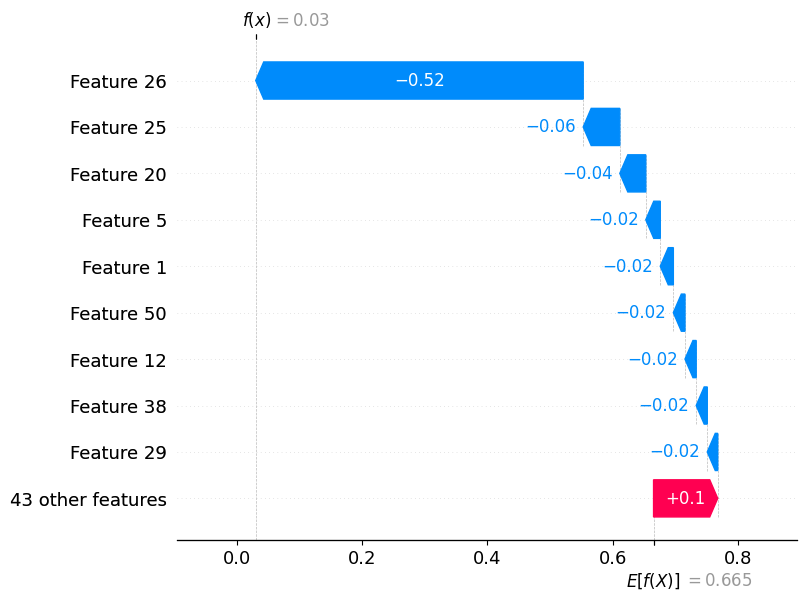

Let's have a look at the waterfall plot to see things a bit more clearly.

Feature 26 is "G2" and feature 25 is "G1": the grades on exam 2 and exam 1, respectively. This is quite interesting: the plots tell us that by far the most important factor in predicting performance on the next exam is performance on the previous exam. Features such as study hours or time spent with friends have almost no impact on the model's predictions.
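A waterfall plot relies on the additivity of Shapley values: starting from a base value (the average model output), adding each feature's contribution in turn arrives at the prediction for that one sample. The following is a minimal sketch of that bookkeeping, with a hypothetical `waterfall_steps` helper and made-up numbers rather than values read from the plot above.

```python
def waterfall_steps(base_value, contributions):
    """Accumulate Shapley contributions on top of a base value.

    Returns a list of (feature, contribution, running_total) tuples,
    ordered from the largest to the smallest absolute contribution.
    """
    ordered = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    running = base_value
    steps = []
    for name, phi in ordered:
        running += phi
        steps.append((name, phi, running))
    return steps


# Hypothetical base value and per-feature contributions for one sample.
base_value = 0.60
contributions = {"G2": 0.25, "G1": 0.08, "study_hours": -0.01}

steps = waterfall_steps(base_value, contributions)
print(f"{'base':>12}: {base_value:.2f}")
for name, phi, running in steps:
    print(f"{name:>12}: {phi:+.2f} -> {running:.2f}")
```

The final running total equals the model's output for that sample, which is why a feature with a near-zero bar, such as study hours in this made-up example, barely moves the prediction.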