A (small) Causal Large Language Model from Scratch in Tensorflow to Auto Generate Python Code

Arslan Ashraf

In this project, we will explore a large language model, its various components, and how it's built. Machine learning models do computation and therefore operate on numbers. So we need a way to take strings and find a numerical representation for them.

In general, there are multiple stages of training large language models including self-supervised pretraining, supervised fine-tuning on often high quality hand labeled data, reinforcement learning with human feedback and perhaps others.

We will only focus on the self-supervised pretraining phase. Before we even begin training LLMs, we need to convert text to numbers. This process is done through tokenization.

Data

The LLM that we're trying to build here, is intended to generate Python code. So we need lots of Python code data. Fortunately, this dataset is openly available on HuggingFace [1].

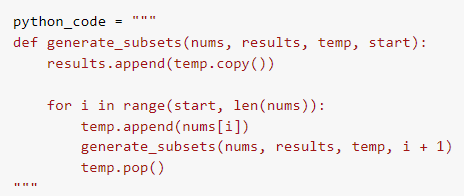

An example of Python code is:

Tokenization

Next, we need to tokenize the Python code. Tokenizers are complex algorithms with various tradeoffs. In this project, we will use a prebuilt tokenizer that is specifically adapted for code generation purposes. The tokenizer we will use is the tokenizer for the LLM StarCoder 2 - 3B [2]. This tokenizer has a vocabulary size of 49152.

Passing the Python code in the image above generates the following list of tokens:

['Ċ', 'def', 'Ġgenerate', '_', 'sub', 'sets', '(', 'nums', ',', 'Ġresults', ',', 'Ġtemp', ',', 'Ġstart', '):', 'ĊĠĠĠ', 'Ġresults', '.', 'append', '(', 'temp', '.', 'copy', '())', 'ĊĠĠĠĠĊĠĠĠ', 'Ġfor', 'Ġi', 'Ġin', 'Ġrange', '(', 'start', ',', 'Ġlen', '(', 'nums', ')):', 'ĊĠĠĠĠĠĠĠ', 'Ġtemp', '.', 'append', '(', 'nums', '[', 'i', '])', 'ĊĠĠĠĠĠĠĠ', 'Ġgenerate', '_', 'sub', 'sets', '(', 'nums', ',', 'Ġresults', ',', 'Ġtemp', ',', 'Ġi', 'Ġ+', 'Ġ', '1', ')', 'ĊĠĠĠĠĠĠĠ', 'Ġtemp', '.', 'pop', '()', 'Ċ']

These tokens map to the following numbers which get passed into the model's embedding layer:

[222, 610, 4468, 100, 1133, 2047, 45, 14558, 49, 3300, 49, 1798, 49, 1496, 731, 303, 3300, 51, 1713, 45, 1452, 51, 3014, 1177, 2205, 456, 613, 347, 2189, 45, 1384, 49, 2095, 45, 14558, 8485, 310, 1798, 51, 1713, 45, 14558, 96, 110, 1156, 310, 4468, 100, 1133, 2047, 45, 14558, 49, 3300, 49, 1798, 49, 613, 494, 244, 54, 46, 310, 1798, 51, 3254, 365, 222]

Once the data has been tokenized, we will split the data into smaller roughly 100MB files and save them.

Model

While the transformer model is the standard LLM architecture, once again there are various design choices for which type of transformer should we implement. For our code generation purposes, the transformer decoder model seems appropriate.

We will train the model to perform causal inference in the form of next token prediction. For example, if the input tokens look as below:

[40, 8571, 10633, 63, 6471, 50, 61, 8571, 222, 1097]

then the target tokens are shifted to the left by one as below:

[8571, 10633, 63, 6471, 50, 61, 8571, 222, 1097, 38180]

For each input token, the model tries to predict the corresponding next token. For example, for input token at position 0 which is token 40, the model tries to predict the target token at position 0 which is 8571 as listed above.

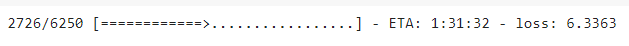

We will code this model in Tensorflow, completely from scratch and attempt to train it on Google Colab's GPU. The image below shows model training successfully beginning. Unfortunately, we will stop here, as there isn't enough compute power freely available to properly train this model and training large language models is very expensive.

References

[1] https://huggingface.co/datasets/codeparrot/codeparrot-clean

[2] https://huggingface.co/bigcode/starcoder2-3b