How to Rigorously Evaluate an ML Model

Arslan Ashraf

April 2024

A high-performing and robust machine learning model can generate a lot of value. But how can we know whether our model's performance, in development and in production, is trustworthy? We will explore one answer to this question in the context of an elementary binary classifier.

A model's performance cannot be summarized by the metric its loss function optimizes. Machine learning models can be very finicky and are susceptible to problems such as training/serving skew, data/model drift, ethical unfairness, degenerate feedback loops, weak predictions on outliers or underrepresented groups, and adversarial attacks, to name just a few.

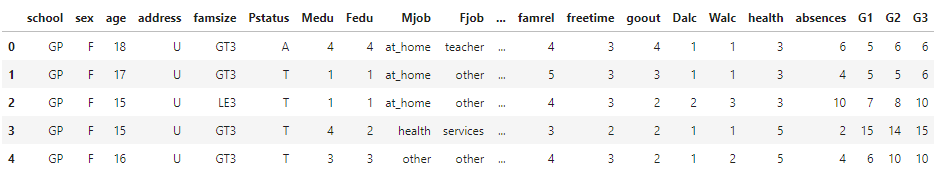

To set the context, we will consider a simple student dataset that contains features such as each student's gender, age, parental education and occupations, and study hours, as well as grades on two exams. The task is to predict whether a student will pass or fail their final class exam.

To examine our binary classifier, we will use a variety of tests to assess the model's strengths, weaknesses, and overall performance. The list below includes some of the tests we will consider.

- Standard classification tests (precision, recall, F1 score)

- Understanding the cost of a decision threshold

- Model calibration

- Weaknesses in the data and/or model

- Model fairness and invariance tests

- Data slice tests

- Directional tests

- Feature importance/attribution
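As a concrete illustration of the first three items, here is a minimal sketch in plain Python (no ML library assumed). It treats label 1 as "pass" and assumes the model outputs a probability of passing. The labels, probabilities, and the 5:1 cost of a false positive (predicting "pass" for a student who actually fails) versus a false negative are made up for illustration. The calibration function is one simple binned variant of expected calibration error, not the only definition in use.

```python
def confusion_counts(y_true, y_prob, threshold=0.5):
    """Return (TP, FP, FN, TN) when predicting 1 for probabilities >= threshold."""
    tp = fp = fn = tn = 0
    for y, p in zip(y_true, y_prob):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1:
            fp += 1
        elif y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def precision_recall_f1(y_true, y_prob, threshold=0.5):
    """Precision, recall, and F1 at a given decision threshold (0.0 when undefined)."""
    tp, fp, fn, _ = confusion_counts(y_true, y_prob, threshold)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def misclassification_cost(y_true, y_prob, threshold, cost_fp=1.0, cost_fn=1.0):
    """Total cost of errors at a threshold, given assumed per-error costs."""
    _, fp, fn, _ = confusion_counts(y_true, y_prob, threshold)
    return cost_fp * fp + cost_fn * fn


def expected_calibration_error(y_true, y_prob, n_bins=10):
    """Weighted mean |avg predicted prob - observed positive rate| over equal-width bins."""
    bins = [[] for _ in range(n_bins)]
    for y, p in zip(y_true, y_prob):
        bins[min(int(p * n_bins), n_bins - 1)].append((y, p))
    ece = 0.0
    for b in bins:
        if b:
            avg_prob = sum(p for _, p in b) / len(b)
            pos_rate = sum(y for y, _ in b) / len(b)
            ece += len(b) / len(y_true) * abs(avg_prob - pos_rate)
    return ece


# Made-up labels (1 = passes the final exam) and predicted probabilities of passing
y_true = [1, 1, 0, 1, 0, 0, 1, 0]
y_prob = [0.9, 0.7, 0.6, 0.4, 0.3, 0.2, 0.8, 0.55]

print(precision_recall_f1(y_true, y_prob, threshold=0.5))

# Assumption: wrongly predicting "pass" for an at-risk student costs 5x a false alarm
thresholds = [0.1, 0.3, 0.5, 0.7, 0.9]
best = min(
    thresholds,
    key=lambda t: misclassification_cost(y_true, y_prob, t, cost_fp=5.0, cost_fn=1.0),
)
print(best)  # 0.7
print(expected_calibration_error(y_true, y_prob, n_bins=2))
```

The cost-minimizing threshold (0.7 in this toy example) differs from the default 0.5; which threshold is best depends entirely on the error costs assumed for the use case.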

Note that this list is far from exhaustive. There are various other tests that we do not consider, such as robustness to adversarial attacks, counterfactual analysis, or checks for degenerate feedback loops, where model predictions generate future (potentially faulty) training data. For additional examples, AWS SageMaker lists a whole host of model fairness metrics [1], which may be important depending on the use case.
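Several items in the list above (invariance, directional, and fairness or data slice tests) probe model behavior rather than aggregate accuracy. Below is a minimal Python sketch of three such checks, assuming the model is exposed as a function that maps a student record (a dict) to a predicted probability of passing. The `toy_model` scorer, the record fields (`study_hours`, `exam1`, `exam2`), and all predictions are hypothetical. The group comparison computes positive-prediction rates per group; their ratio is similar in spirit to disparate-impact-style metrics listed in [1], but this is not SageMaker Clarify's implementation.

```python
from collections import defaultdict


def toy_model(student):
    """Hypothetical scorer returning P(pass); by construction it ignores gender."""
    score = 0.02 * student["study_hours"] + 0.004 * (student["exam1"] + student["exam2"])
    return min(max(score, 0.0), 1.0)


def invariance_violations(predict, records, feature, values, tol=1e-9):
    """Indices of records whose prediction changes when only `feature` is swapped."""
    bad = []
    for i, rec in enumerate(records):
        preds = [predict({**rec, feature: v}) for v in values]
        if max(preds) - min(preds) > tol:
            bad.append(i)
    return bad


def directional_violations(predict, records, feature, delta):
    """Indices where raising `feature` by `delta` lowers the predicted probability."""
    bad = []
    for i, rec in enumerate(records):
        if predict({**rec, feature: rec[feature] + delta}) < predict(rec):
            bad.append(i)
    return bad


def positive_rates(groups, y_pred):
    """Fraction of positive (1 = pass) predictions within each group."""
    counts = defaultdict(lambda: [0, 0])  # group -> [positives, total]
    for g, pred in zip(groups, y_pred):
        counts[g][0] += pred
        counts[g][1] += 1
    return {g: pos / total for g, (pos, total) in counts.items()}


students = [
    {"gender": "F", "study_hours": 10, "exam1": 60, "exam2": 70},
    {"gender": "M", "study_hours": 5, "exam1": 80, "exam2": 55},
]
print(invariance_violations(toy_model, students, "gender", ["F", "M"]))  # []
print(directional_violations(toy_model, students, "study_hours", 2))  # []

# Made-up thresholded predictions for six students in two groups
groups = ["F", "F", "F", "M", "M", "M"]
y_pred = [1, 1, 0, 1, 0, 0]
rates = positive_rates(groups, y_pred)
print(rates["M"] / rates["F"])  # 0.5 (ratio of positive-prediction rates)
```

In practice, `records` would be held-out rows and `predict` would wrap the trained model; a non-empty result flags examples worth inspecting rather than proving on its own that the model is unfair.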

References

[1] https://docs.aws.amazon.com/sagemaker/latest/dg/clarify-measure-post-training-bias.html